Haptics

Introduction

Haptics refers to the technology of touch. What computer graphics is to users' sense of sight, haptics is to their sense of touch. Typically, haptics is used to provide force and tactile feedback about objects in a virtual environment. This conveys features of an object that might otherwise be unobservable, such as roughness, elasticity, and softness.

The applications of haptic technology are widespread. Developers in areas such as medicine, media and entertainment, computer-assisted prototyping, arts and culture, and e-commerce are already using haptics to enhance their products or projects.

The HaptiCast project uses haptics to increase the level of immersion, and thus the entertainment value, of a 3D video game.

Haptic Rendering

Haptic rendering is the process of calculating force values and sending them to be displayed at a haptic device. Typically, penalty-based methods are used to display a force to the user based on some distance or penetration depth.

The positions of one or more haptic interface points (HIPs) are tracked by the haptic device. The controlling software detects and measures collisions of these points with objects in the virtual environment. Based upon the penetration depth at the collision point, as well as some physical properties of the objects, a force value is calculated and displayed at the haptic device. Quite often, a modified version of Hooke's spring law is used to generate the force value. This pairing of collision detection with collision response is common to many haptic rendering algorithms.
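As a rough illustration of the penalty-based approach, the sketch below computes a Hooke's-law force for a single HIP touching a sphere. The sphere geometry, the function name `penalty_force`, and the stiffness value are assumptions chosen for the example; they do not come from the HaptiCast code.

```python
import math


def penalty_force(hip, center, radius, stiffness):
    """Return a spring-like force pushing the HIP out of a sphere.

    hip, center: (x, y, z) tuples; radius in metres; stiffness in N/m.
    All names and units here are illustrative assumptions.
    """
    # Vector from the sphere centre to the HIP.
    offset = [h - c for h, c in zip(hip, center)]
    distance = math.sqrt(sum(o * o for o in offset))

    # Collision detection: a non-positive depth means no contact.
    depth = radius - distance
    if depth <= 0.0 or distance == 0.0:
        return (0.0, 0.0, 0.0)

    # Collision response: F = k * depth, directed along the outward normal.
    normal = [o / distance for o in offset]
    return tuple(stiffness * depth * n for n in normal)
```

With a stiffness of 500 N/m, a HIP that has sunk 2 cm into the sphere would feel a force of about 10 N pushing it back toward the surface. A real device would typically cap this value to stay within its force limits.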

"Lift" Wand Rendering

The rendering algorithm used by the lift wand is simple, efficient, and stable. These are important factors for any force rendering algorithm employed by a haptic game.

When the user selects (picks up) an object using this wand, they are effectively choosing their HIP. Each object in the game world has a collision geometry and some physical characteristics (mass, inertia, softness, ...) associated with it. The physics engine uses this information to simulate the dynamics of the object within the game world as it interacts with other objects in the environment.

The lift wand takes advantage of the physics engine's ability to perform collision detection and response by placing the virtual HIP location at the center of the selected object and using forces to move and animate the object within the game world. These forces are generated by the haptic rendering equation.

There are two relevant properties of the HIP object which are used by the rendering equation: position and velocity. The position of the virtual HIP is tracked by the rendering software. This position is compared to the object's location (relative to the user's viewpoint), and if the two positions differ, a force vector is generated in the direction of the virtual HIP location. This force is then applied to the selected object and displayed to the user at the haptic device. If the force is large enough, it should move the object towards the virtual HIP location until they occupy the same point. Think of the virtual HIP location as a "setpoint" and the force equation as a P-controller which aims to reach that point.
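The P-controller analogy can be sketched in a few lines. This is only an illustration: the function name `position_force` and the gain parameter are assumptions, and the actual equation used by the lift wand is the one given in the figure.

```python
def position_force(hip_position, object_position, gain):
    """Proportional force pulling the object toward the virtual HIP.

    The error is the vector from the object to the setpoint (the virtual
    HIP location); the force is that error scaled by a constant gain.
    """
    return tuple(gain * (h - o) for h, o in zip(hip_position, object_position))


# Example: the setpoint is one unit to the right of the object.
force = position_force((1.0, 2.0, 0.0), (0.0, 2.0, 0.0), gain=50.0)
```

The force vanishes when the object reaches the setpoint and grows linearly with the distance between them, which is the behaviour the paragraph above describes.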

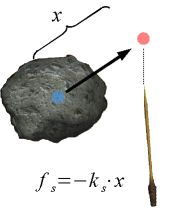

The part of the rendering equation which uses position information is shown in the figure below:


Using just the position for displaying haptic effects is okay, but users won't feel the impulse forces generated by collision of the object with the other objects in the environment. That's where velocity comes in. When an object collides with another in the environment, there's a sudden change in velocity. This is felt by the user as an impulse.

The software component which controls rendering for the lift wand tracks the velocity of the HIP object and checks, at each time step, if there has been a sudden change in the magnitude or direction of the velocity. If there has been, this change is measured, scaled, and displayed to the user at the haptic device. There is no need to apply this force to the selected object since this is handled by the physics engine.
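One way to picture this per-time-step check is the sketch below. The class name `ImpulseDetector`, the threshold test on the velocity difference, and the scale factor are assumptions made for illustration; the source does not specify how "sudden" is decided or which sign convention is used for the displayed force.

```python
import math


class ImpulseDetector:
    """Tracks an object's velocity and reports sudden changes (hypothetical)."""

    def __init__(self, threshold, scale):
        self.threshold = threshold  # smallest velocity change treated as an impulse
        self.scale = scale          # factor applied before display at the device
        self.previous = None        # velocity seen at the previous time step

    def update(self, velocity):
        """Call once per time step; returns the impulse force to display."""
        previous, self.previous = self.previous, tuple(velocity)
        if previous is None:
            return (0.0, 0.0, 0.0)

        # The vector difference captures changes in magnitude and direction.
        delta = tuple(v - p for v, p in zip(velocity, previous))
        if math.sqrt(sum(d * d for d in delta)) < self.threshold:
            return (0.0, 0.0, 0.0)

        # Measure and scale the change; this force goes to the device only,
        # because the physics engine already handles the object itself.
        return tuple(self.scale * d for d in delta)
```

Calling `update` with a steady velocity returns a zero vector, while a velocity that reverses between two steps (for example, after the object hits a wall) returns a scaled copy of the change.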

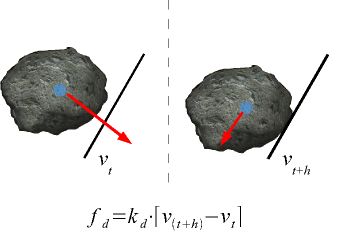

Below is the rendering equation used for displaying impulse forces to the user:


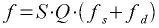

The two forces calculated in the equations above are combined, transformed, and scaled to create a single rendering equation for the lift wand:


where f is the force rendered at the haptic device, S is a scaling matrix, and Q is a transformation matrix from the game world frame to the haptic device frame.
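Read literally, the description suggests something of the form f = S Q (f_position + f_impulse): the two forces are summed, rotated into the device frame by Q, then scaled by S. The sketch below follows that reading. The order of operations, the function names, and the example matrices are assumptions, not the project's actual values.

```python
def mat_vec(matrix, vector):
    """Multiply a 3x3 matrix (tuple of rows) by a 3-component vector."""
    return tuple(sum(m * v for m, v in zip(row, vector)) for row in matrix)


def lift_wand_force(position_force, impulse_force, Q, S):
    """Combine, transform, and scale the two forces (assumed order)."""
    # Combine the position and impulse contributions in the game world frame.
    combined = tuple(p + i for p, i in zip(position_force, impulse_force))
    # Transform into the haptic device frame, then scale for the device.
    return mat_vec(S, mat_vec(Q, combined))


# Hypothetical matrices: Q swaps the y and z axes, S halves every component.
Q = ((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 1.0, 0.0))
S = ((0.5, 0.0, 0.0), (0.0, 0.5, 0.0), (0.0, 0.0, 0.5))
f = lift_wand_force((10.0, 0.0, 0.0), (0.0, 20.0, 0.0), Q, S)
```

Keeping S as a separate matrix lets each device axis be scaled independently, which can be useful when a device has different force limits along different axes.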